The circuit breaker pattern is a resilience strategy in software development that prevents failing services or dependencies from dragging down an entire system. By detecting repeated failures and temporarily blocking calls to unstable components, it protects performance, improves user experience, and supports graceful degradation in modern distributed architectures.

As organizations increasingly adopt microservices, serverless architectures, and cloud-native platforms, the probability of partial failure rises: networks time out, databases throttle, and external APIs become unavailable. Without protection, applications may waste resources retrying failing operations, saturate threads, or trigger cascading failures across services. The circuit breaker pattern, popularized by Michael Nygard’s “Release It!” and now available through frameworks and libraries such as Spring Cloud Circuit Breaker, Polly for .NET, and Resilience4j, has become a core building block of production-grade systems.

Background: What Problem Does the Circuit Breaker Solve?

In distributed systems, failures are inevitable—latency spikes, partial outages, and misconfigurations occur even in well-run environments. Traditional synchronous calls (e.g., HTTP, gRPC, database queries) can block threads while waiting for a response that may never arrive. If many requests block simultaneously, thread pools and connection pools can be exhausted, causing system-wide slowdowns or outright outages.

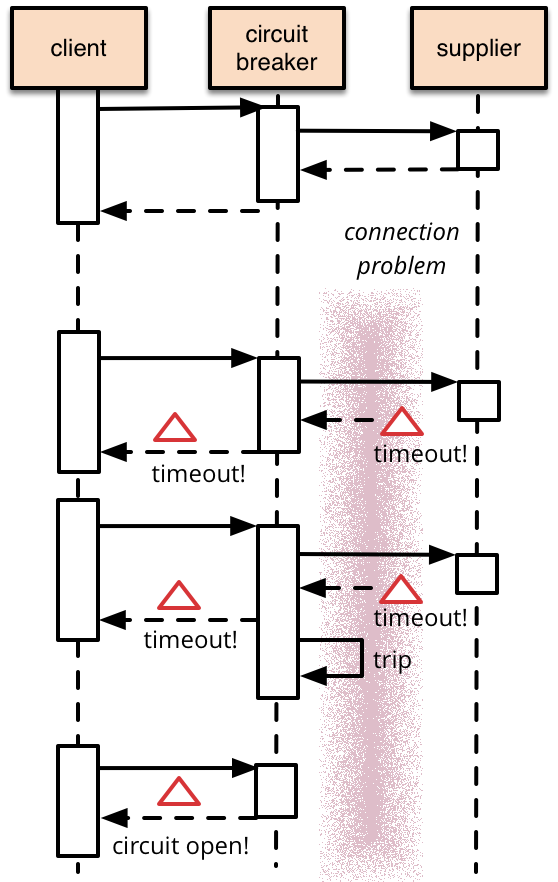

The circuit breaker pattern treats remote calls like electrical circuits. When the “current” (calls) encounters excessive failures, the breaker “trips” to prevent further damage. Instead of continuing to send traffic to a failing dependency, the application fails fast, returns fallback responses where possible, and periodically tests whether the dependency has recovered.

- Fail fast: Avoid long waits on operations that are likely to fail.

- Prevent cascading failures: One unhealthy service should not bring down others.

- Enable graceful degradation: Offer partial functionality instead of a complete outage.
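The fail-fast and graceful-degradation goals above can be illustrated without any breaker at all, simply by bounding how long a caller waits. The following is a minimal sketch using only the JDK (Java 9 or later); `BoundedCall` and `callWithFallback` are hypothetical names introduced for illustration, not part of any library.

```java
import java.time.Duration;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

/** Hypothetical helper: bounds the caller's wait and returns a fallback instead of hanging. */
final class BoundedCall {
    private BoundedCall() {}

    static <T> T callWithFallback(Supplier<T> remoteCall, Duration timeout, T fallback) {
        return CompletableFuture
                // Run the call on the common pool so the caller is not tied to its latency.
                .supplyAsync(remoteCall)
                // Fail fast: if no result arrives in time, complete with the fallback value.
                .completeOnTimeout(fallback, timeout.toMillis(), TimeUnit.MILLISECONDS)
                // Degrade gracefully: an exception from the call also yields the fallback.
                .exceptionally(error -> fallback)
                .join();
    }
}
```

Note that this sketch does not cancel the underlying call after a timeout; it only stops the caller from waiting. A circuit breaker goes one step further by not issuing the call at all once the dependency is known to be unhealthy.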

Core Concepts and States of a Circuit Breaker

A circuit breaker typically transitions through three primary states:

- Closed: Normal operation. All calls are allowed. The breaker monitors success/failure rates over a rolling window (e.g., last 100 calls or last 30 seconds).

- Open: Entered when the failure threshold is exceeded (for example, more than 50% of calls fail). Calls are immediately rejected without contacting the dependency, often returning a fallback or an error that can be handled gracefully.

- Half-open: After a cool-down period, the breaker allows a limited number of trial calls to see if the dependency has recovered. If they succeed, the breaker resets to closed. If they fail, it returns to open.
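The three states can be sketched as a small state machine. The version below is a simplified illustration, not the implementation used by any library: it trips on consecutive failures rather than a rolling window, and the class name, constructor parameters, and injectable clock are assumptions made for the example.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

/** Minimal three-state breaker; names and thresholds are illustrative only. */
final class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold;  // consecutive failures that trip the breaker
    private final long openMillis;       // cool-down before a trial call is allowed
    private final LongSupplier clock;    // time source in milliseconds, e.g. System::currentTimeMillis

    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAt = 0L;

    SimpleCircuitBreaker(int failureThreshold, long openMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    synchronized State state() {
        return state;
    }

    /** Runs the action if permitted; otherwise returns the fallback without calling the dependency. */
    synchronized <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (state == State.OPEN) {
            if (clock.getAsLong() - openedAt < openMillis) {
                return fallback.get();       // still cooling down: fail fast
            }
            state = State.HALF_OPEN;         // cool-down elapsed: let one trial call through
        }
        try {
            T result = action.get();
            consecutiveFailures = 0;
            state = State.CLOSED;            // success closes the breaker (or keeps it closed)
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
                state = State.OPEN;          // trial failed or threshold reached: trip
                openedAt = clock.getAsLong();
            }
            return fallback.get();
        }
    }
}
```

For brevity the sketch holds a lock for the duration of the call, which serializes callers and means only one trial call runs at a time in the half-open state. A production implementation would track state without blocking concurrent requests.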

Advanced implementations add features such as:

- Separate thresholds for slow calls vs. failed calls.

- Per-endpoint or per-tenant configuration.

- Integration with rate limiting, bulkheads, and timeouts.

Objectives and Benefits in Modern Architectures

In contemporary cloud-native systems (Kubernetes, serverless platforms, service meshes), the circuit breaker pattern supports several key engineering objectives:

- Resilience and availability: Keep the core user journey functioning despite partial outages.

- Performance protection: Avoid latency amplification due to retries or blocked threads.

- Cost control: Reduce unnecessary calls to paid external APIs when they are failing.

- Observability: Provide clear signals when a dependency becomes unstable through metrics and alerts.

When combined with modern observability stacks (Prometheus, OpenTelemetry, Grafana, Azure Monitor, Amazon CloudWatch), circuit breakers help teams quickly identify problematic services, understand blast radius, and validate recovery behavior during chaos engineering experiments.

Key Implementation Technologies and Libraries (2024–2025)

Most major platform ecosystems provide first-class circuit breaker tooling:

- Java / JVM:

  - Resilience4j – Lightweight, functional, and widely used in Spring Boot and Quarkus.

  - Spring Cloud CircuitBreaker – Abstraction layer that can delegate to Resilience4j or other implementations.

- .NET:

  - Polly – Mature resilience library integrated with .NET 8’s HttpClient pipeline and Microsoft.Extensions.Resilience.

- Node.js / JavaScript:

  - opossum – A popular circuit breaker for Node services.

- Service Mesh / API Gateways:

  - Envoy, Istio, Linkerd – Provide circuit breaking, outlier detection, and connection limits at the network layer.

The architectural choice is often between application-level circuit breakers (coded in the service) and infrastructure-level breakers (configured in sidecars or gateways). Many production systems use both: infrastructure breakers to shield from network pathologies and application breakers to manage business-level fallbacks.

Code Example: Circuit Breaker in Java with Resilience4j

The following example shows a REST client calling an external payments API using Resilience4j in a Spring Boot service (simplified for clarity):

```java
import io.github.resilience4j.circuitbreaker.annotation.CircuitBreaker;
import org.springframework.stereotype.Service;
import org.springframework.web.client.RestTemplate;

@Service
public class PaymentClient {

    private final RestTemplate restTemplate = new RestTemplate();

    private static final String CB_NAME = "paymentService";

    @CircuitBreaker(name = CB_NAME, fallbackMethod = "fallbackPaymentStatus")
    public PaymentStatus getPaymentStatus(String paymentId) {
        String url = "https://api.example-payments.com/v1/payments/" + paymentId;
        return restTemplate.getForObject(url, PaymentStatus.class);
    }

    // Fallback is triggered when the circuit is open or the call fails.
    public PaymentStatus fallbackPaymentStatus(String paymentId, Throwable ex) {
        // Degraded but meaningful response
        PaymentStatus status = new PaymentStatus();
        status.setId(paymentId);
        status.setStatus("UNKNOWN");
        status.setMessage("Payment status temporarily unavailable. Please try again later.");
        return status;
    }
}
```

Configuration in application.yml might look like:

```yaml
resilience4j:
  circuitbreaker:
    instances:
      paymentService:
        sliding-window-type: COUNT_BASED
        sliding-window-size: 50
        failure-rate-threshold: 50
        slow-call-rate-threshold: 50
        slow-call-duration-threshold: 2s
        wait-duration-in-open-state: 30s
        permitted-number-of-calls-in-half-open-state: 5
        minimum-number-of-calls: 20
```

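In this scenario, once at least 20 calls have been recorded, the breaker opens if 50% or more of the calls in the 50-call window fail, or if 50% or more of them take longer than 2 seconds. It stays open for 30 seconds and then permits 5 trial calls in the half-open state. While the breaker is open, calls are short-circuited to the fallback, so the API degrades gracefully instead of leaving users waiting on timeouts.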
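To make the window arithmetic concrete, here is a simplified model of a count-based sliding window in plain Java. It is a sketch written for illustration, not Resilience4j's actual implementation: `CountBasedWindow` and its method names are hypothetical, it tracks only failures (not slow calls), and it assumes the threshold comparison is inclusive.

```java
/** Illustrative count-based window; a simplified model, not a library implementation. */
final class CountBasedWindow {
    private final boolean[] failed;             // ring buffer of the most recent outcomes
    private final int minimumCalls;             // no evaluation until this many calls are recorded
    private final double thresholdPercent;      // failure rate (in percent) that opens the breaker
    private int recorded = 0;                   // slots filled so far, capped at the window size
    private int next = 0;                       // index of the slot to overwrite next
    private int failures = 0;                   // failures currently inside the window

    CountBasedWindow(int windowSize, int minimumCalls, double thresholdPercent) {
        this.failed = new boolean[windowSize];
        this.minimumCalls = minimumCalls;
        this.thresholdPercent = thresholdPercent;
    }

    /** Records one outcome and reports whether the breaker should open. */
    boolean recordAndCheck(boolean callFailed) {
        if (recorded == failed.length) {
            if (failed[next]) {
                failures--;                     // the oldest outcome leaves the window
            }
        } else {
            recorded++;
        }
        failed[next] = callFailed;
        if (callFailed) {
            failures++;
        }
        next = (next + 1) % failed.length;
        return recorded >= minimumCalls && failureRatePercent() >= thresholdPercent;
    }

    double failureRatePercent() {
        return recorded == 0 ? 0.0 : 100.0 * failures / recorded;
    }
}
```

With a window of 50, a minimum of 20 calls, and a 50% threshold, nineteen consecutive failures do not open the breaker in this model because the minimum has not been reached; the twentieth does.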

Code Example: Circuit Breaker in .NET with Polly

In modern .NET (6–8), Polly is typically wired into HttpClientFactory:

```csharp
// AddPolicyHandler comes from the Microsoft.Extensions.Http.Polly NuGet package.
using System;
using System.Net.Http;
using Microsoft.Extensions.DependencyInjection;
using Polly;
using Polly.CircuitBreaker;

var services = new ServiceCollection();

services.AddHttpClient("InventoryApi", httpClient =>
{
    httpClient.BaseAddress = new Uri("https://inventory.example.com/");
})
.AddPolicyHandler(Policy
    // HttpRequestException is assumed here as the handled exception type.
    .Handle<HttpRequestException>()
    .OrResult<HttpResponseMessage>(r => (int)r.StatusCode >= 500)
    .CircuitBreakerAsync(
        handledEventsAllowedBeforeBreaking: 5,
        durationOfBreak: TimeSpan.FromSeconds(30)));

var provider = services.BuildServiceProvider();
var httpClientFactory = provider.GetRequiredService<IHttpClientFactory>();
var client = httpClientFactory.CreateClient("InventoryApi");

// Usage
var response = await client.GetAsync("/v1/items/12345");
```

Here, if five handled exceptions or 5xx responses occur in a row, the breaker opens for 30 seconds. Calls during that period fail immediately with a BrokenCircuitException, which the application layer can catch to trigger fallbacks or alternate flows.

Real-World Use Cases

The circuit breaker pattern appears in many high-traffic, real-time applications:

- E-commerce checkout: If a recommendation engine or reviews service becomes slow, the checkout flow must continue. Circuit breakers allow the core payment and order services to function while recommendations are temporarily disabled.

- Streaming platforms: A video service may depend on a metadata API for thumbnails and descriptions. If that API fails, the playback service should still stream the video, perhaps with reduced metadata, rather than deny content entirely.

- Banking and fintech: Banking mobile apps often call multiple back-end services and external partners (card networks, credit bureaus). Circuit breakers help ensure that a failure in a noncritical external partner does not prevent users from viewing balances or recent transactions.

- IoT and edge computing: Devices may lose connectivity to cloud services. Circuit breakers can prevent infinite retries, protect local resources, and trigger offline modes until connectivity improves.

Scientific and Engineering Significance

From a systems engineering perspective, the circuit breaker pattern is a practical application of feedback control and fault-tolerant design. It transforms unpredictable failure modes (such as unbounded latency) into predictable ones (fast-failing with known error semantics). This predictability improves the reliability of distributed systems and allows for formal reasoning about system behavior under stress.

In reliability engineering terms, circuit breakers reduce the probability of cascading failures and help shorten mean time to recovery (MTTR) by surfacing clear signals (breaker-open events) when a dependency crosses a failure threshold. They also align with chaos engineering practices, where teams deliberately inject latency and faults to validate that breakers trip appropriately and that fallbacks behave as designed.

Common Challenges and Pitfalls

While circuit breakers are powerful, misconfiguration can introduce new problems:

- Incorrect thresholds: If thresholds are too strict, breakers may open for transient blips, causing unnecessary degradation. If too lenient, they fail to protect the system. Tuning requires data from production traffic and observability tools.

- Thundering herd on recovery: When a breaker transitions to half-open, a poorly configured system might suddenly send massive traffic spikes to a recovering dependency. Limiting concurrent trial calls and using jittered backoff strategies mitigates this.

- Poor fallback design: A fallback that performs heavy computations or calls another unstable service can worsen the problem. Fallbacks should be simple, fast, and limited in scope (e.g., cached data, default values, or degraded responses).

- Over-reliance on circuit breakers: They are not a substitute for proper timeouts, retries with backoff, capacity planning, or robust SLAs with providers. Circuit breakers should be part of a broader resilience strategy.
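The jittered backoff mentioned in the thundering-herd item can be sketched as follows. This is one possible approach (exponential backoff with a fully random delay, often called "full jitter"), shown under the assumption that each client instance waits a computed delay before its next probe or retry; `JitteredBackoff` and its parameters are hypothetical names.

```java
import java.util.Random;

/** Hypothetical helper that spreads probe or retry attempts out in time. */
final class JitteredBackoff {
    private final long baseMillis;   // delay ceiling for the first attempt
    private final long capMillis;    // upper limit for any delay ceiling
    private final Random random;     // injectable so tests can use a fixed seed

    JitteredBackoff(long baseMillis, long capMillis, Random random) {
        this.baseMillis = baseMillis;
        this.capMillis = capMillis;
        this.random = random;
    }

    /** Ceiling for the given attempt: base * 2^attempt, limited to the cap. */
    long ceilingMillis(int attempt) {
        long ceiling = baseMillis;
        for (int i = 0; i < attempt; i++) {
            if (ceiling > capMillis / 2) {
                return capMillis;    // doubling again would pass the cap
            }
            ceiling *= 2;
        }
        return Math.min(ceiling, capMillis);
    }

    /** Picks a random delay in [0, ceiling] so that clients do not retry in lockstep. */
    long nextDelayMillis(int attempt) {
        return (long) (random.nextDouble() * (ceilingMillis(attempt) + 1));
    }
}
```

With a base of 100 ms and a cap of 1000 ms, the ceilings for attempts 0 through 4 are 100, 200, 400, 800, and 1000 ms. Because each instance draws its own random delay below the ceiling, trial traffic reaches the recovering dependency gradually rather than as a single spike.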

Modern SRE practices recommend instrumenting circuit breakers with metrics (open/closed state, failure rates, slow call rates) and integrating them into dashboards and alerting pipelines, so tuning can be iterative and evidence-based.

Conclusions

The circuit breaker pattern has evolved from a niche resilience strategy into a foundational concept for building reliable, high-traffic distributed systems. By cutting off failing dependencies, failing fast, and enabling graceful degradation, circuit breakers protect user experience and backend stability alike.

With mature libraries like Resilience4j, Polly, and opossum, and with circuit-breaking features integrated into service meshes and gateways, engineering teams today can adopt this pattern with relatively low implementation overhead. The remaining difficulty lies in choosing appropriate thresholds, designing safe fallbacks, and integrating breakers with observability and chaos engineering practices.

In an era where microservices and cloud-native deployments are the norm, mastering the circuit breaker pattern is no longer optional—it is a core competency for any team serious about resilience, scalability, and operational excellence.

References

- Michael Nygard, Release It!: Design and Deploy Production-Ready Software, 2nd Edition, Pragmatic Bookshelf, 2018.

- Martin Fowler, “Circuit Breaker,” https://martinfowler.com/bliki/CircuitBreaker.html

- Resilience4j Documentation, https://resilience4j.readme.io/docs/circuitbreaker

- Polly for .NET Documentation, https://www.pollydocs.org/

- Istio Traffic Management: Circuit Breaking, https://istio.io/latest/docs/tasks/traffic-management/circuit-breaking/

- Microsoft Docs, “Use HttpClientFactory to implement resilient HTTP requests,” https://learn.microsoft.com/en-us/dotnet/core/extensions/httpclient-factory